Motivation and Objectives

Validating integrated circuits during the development process is a hard task because, as stated before, compliance with some standard specifications is hard to ensure within practical simulation times. R&D product development teams are always under schedule pressure, since a new product must reach the consumer market before its competitors, or only shortly after them. For that reason, development teams need to get the design right the first time. The easiest way to achieve first-time-right silicon is to increase verification, but some verification tasks cannot be accelerated by adding more human resources, because they are limited by machine time rather than human effort. There is always a solution: instead of using brute force, other approaches can ensure correct validation within a reasonable time frame.

Product development teams also face a limited number of software licenses (these licenses are very expensive), which caps the number of simulations that can run in parallel. Another problem related to the lack of licenses is that each new product usually demands a new verification environment. Both aspects directly affect the product development schedule.

A new product is certified using a set of tools, including digital oscilloscopes. Modern oscilloscopes come with very good signal-processing software, but this software can only run on the oscilloscope itself. Making such software available in a Linux/Windows PC environment would help with verification tasks.

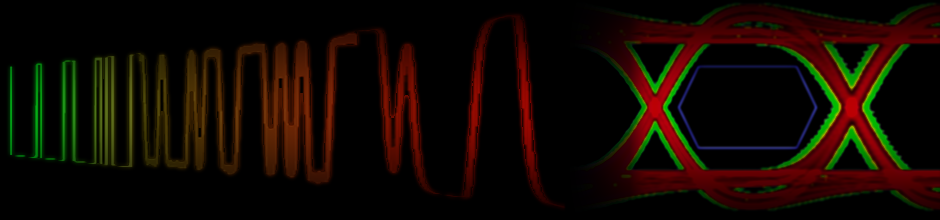

The scope of this work is to bring some of the signal-processing tools into the verification environment. For some interfaces, such as USB 2.0/3.0, a software tool is available for download that analyzes simulation waveforms and evaluates whether the circuit meets the standard's specifications for interface signals. The main idea is to create a similar software tool, without such product limitations, that processes saved simulation files and evaluates whether previously defined parameters are met, like the signal-processing software available inside new digital oscilloscopes. New signal-evaluation techniques are also needed to reduce simulation time; in this work, the Statistical Eye Diagram technique will be added to the software verification tool. The proposed software will add new features and will be open source, allowing users to improve the existing libraries and add new ones. Its main characteristics are:

- The proposed software will allow the end user to characterize and estimate the full SerDes architecture before the block is implemented, reducing the number of simulation steps and silicon re-spins

- Silicon characterization will be possible without third-party software: the user captures data with an oscilloscope, and the proposed software performs the data-processing steps, reducing the need for third-party characterization software

- The proposed software will provide the end user with a set of functions/libraries for jitter characterization and decomposition (Random Jitter, Periodic Jitter, Data-Dependent Jitter)

- Silicon behavior will be estimated from short but accurate transistor-level simulations

- Secondary capabilities (nice to have)

  - Characterize HDMI/USB cables in terms of S-parameters

  - Characterize HDMI/USB cables in terms of noise characteristics

  - Clock recovery unit

  - Eye diagram generator

  - Statistical eye diagram generator

  - Analog-to-digital and digital-to-analog conversion

  - Measurement units

    - Rise- and fall-time measurement

    - Minimum and maximum values of the analog signal for each digital value

  - Waveform generator

    - Data generation

      - Internally generated

      - Extracted from user .csv files

    - Random noise and duty cycle generation

  - Cable emulator

  - Equalization
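The rise/fall-time measurement unit listed above can be illustrated with a minimal Python sketch (standard library only); it is not the tool's actual implementation. It assumes a waveform given as two equal-length lists of sample times and voltages, uses the common 10% and 90% thresholds, and linearly interpolates between samples. As a simplification, the 0% and 100% levels are taken as the waveform's minimum and maximum; oscilloscope software often derives these levels from a histogram of the signal instead. The function names `crossing_time` and `edge_times` are hypothetical.

```python
def crossing_time(t, v, level, start, rising):
    """Find the first crossing of `level` at or after sample index `start`.

    Returns (interpolated_time, index), or (None, None) if there is no crossing.
    """
    for i in range(start, len(v) - 1):
        v0, v1 = v[i], v[i + 1]
        if (rising and v0 < level <= v1) or (not rising and v0 > level >= v1):
            # Linear interpolation between samples i and i + 1
            frac = (level - v0) / (v1 - v0)
            return t[i] + frac * (t[i + 1] - t[i]), i
    return None, None


def edge_times(t, v, rising=True, low_frac=0.1, high_frac=0.9):
    """Return the rise (or fall) time of every complete edge in the waveform.

    Assumption: the 0 % and 100 % levels are min(v) and max(v).
    """
    vmin, vmax = min(v), max(v)
    low = vmin + low_frac * (vmax - vmin)
    high = vmin + high_frac * (vmax - vmin)
    # A rising edge goes from low to high; a falling edge from high to low
    first, second = (low, high) if rising else (high, low)
    durations = []
    i = 0
    while True:
        t1, i1 = crossing_time(t, v, first, i, rising)
        if t1 is None:
            break
        t2, i2 = crossing_time(t, v, second, i1, rising)
        if t2 is None:
            break
        durations.append(t2 - t1)
        i = i2 + 1  # continue searching after this edge
    return durations
```

For a trapezoidal pulse sampled every 1 s whose edges ramp between 0 and 1 over four sample intervals, both `edge_times(t, v, rising=True)` and `edge_times(t, v, rising=False)` return a single value of about 3.2 s, which is 80% of the 4 s ramp.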
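For the eye diagram generator, a conventional (non-statistical) eye can be sketched by slicing the waveform into traces that span a fixed number of unit intervals (UIs) and overlaying them. The Python sketch below assumes a uniformly sampled simulation waveform with an integer number of samples per UI and a known transmitted bit sequence aligned to the sample grid (bit k occupies samples k*N through (k+1)*N - 1). That holds for a simulation, but oscilloscope captures would first need clock recovery. It also measures the vertical eye opening at each sampling phase. All function names are hypothetical, and the Statistical Eye Diagram technique mentioned above is a different method that is not shown here.

```python
def fold_eye(v, samples_per_ui, ui_per_trace=2):
    """Cut a uniformly sampled waveform into traces of `ui_per_trace` UIs.

    Consecutive traces start one UI apart; plotting all traces on the same
    time axis (0 to ui_per_trace UI) produces the eye diagram.
    """
    span = samples_per_ui * ui_per_trace
    return [v[s:s + span] for s in range(0, len(v) - span + 1, samples_per_ui)]


def eye_height(v, bits, samples_per_ui, phase):
    """Vertical eye opening at sampling offset `phase` (0 <= phase < samples_per_ui).

    Assumes bit k occupies samples [k*samples_per_ui, (k+1)*samples_per_ui).
    A negative result means the eye is closed at that phase.
    """
    ones, zeros = [], []
    for k, b in enumerate(bits):
        idx = k * samples_per_ui + phase
        if idx >= len(v):
            break
        (ones if b else zeros).append(v[idx])
    if not ones or not zeros:
        raise ValueError("need at least one 0 and one 1 among the sampled bits")
    # Lowest sampled '1' minus highest sampled '0'
    return min(ones) - max(zeros)


def best_phase(v, bits, samples_per_ui):
    """Return (phase, height) for the sampling phase with the largest opening."""
    heights = [(p, eye_height(v, bits, samples_per_ui, p))
               for p in range(samples_per_ui)]
    return max(heights, key=lambda ph: ph[1])
```

Keeping the known bit sequence, rather than classifying samples with a decision threshold, lets the eye height become negative when inter-symbol interference closes the eye. Counting the phases with a positive opening and multiplying by the sample period would give a rough eye-width estimate.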
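As a possible starting point for the jitter characterization functions, the following Python sketch measures time interval error (TIE): each threshold crossing is compared against an ideal clock with a known unit interval, and the RMS and peak-to-peak values of the errors are reported. It assumes the nominal UI is known, that there is no frequency offset between the data and the ideal clock, and that jitter stays below half a UI, so each crossing can be matched to the nearest ideal edge. The ideal clock phase is chosen so that the mean TIE is zero. This measures total jitter only; separating random, periodic and data-dependent components would build on the TIE sequence (for example through spectral analysis or averaging per data pattern). The function names are hypothetical.

```python
import math


def crossing_times(t, v, threshold=0.0):
    """Linearly interpolated times at which v crosses `threshold` (either direction)."""
    times = []
    for i in range(len(v) - 1):
        a, b = v[i] - threshold, v[i + 1] - threshold
        if (a < 0 <= b) or (a > 0 >= b):
            frac = a / (a - b)  # fraction of the segment where it reaches zero
            times.append(t[i] + frac * (t[i + 1] - t[i]))
    return times


def tie_stats(crossings, ui):
    """Return (tie_list, rms, peak_to_peak) against an ideal clock of period `ui`."""
    t0 = crossings[0]
    # Match each crossing to the nearest ideal edge t0 + n*ui (assumes |TIE| < ui/2)
    errors = [tc - (t0 + round((tc - t0) / ui) * ui) for tc in crossings]
    mean = sum(errors) / len(errors)
    errors = [e - mean for e in errors]  # shift ideal clock phase so mean TIE is zero
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return errors, rms, max(errors) - min(errors)
```

For crossings at 0.0, 1.1, 2.0, 2.9 and 5.0 UI with `ui=1.0`, the TIE values are approximately 0, 0.1, 0, -0.1 and 0, giving about 0.063 UI RMS and 0.2 UI peak-to-peak.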